The Naive Bayes Algorithm: Introduction to Probabilistic Machine Learning

The Naive Bayes Algorithm: Introduction to Probabilistic Machine Learning

Machine learning algorithms are an integral part of today’s technology-driven world. They are used in a wide range of applications, including natural language processing, image recognition, and autonomous systems. One such algorithm is the Naive Bayes algorithm, a popular probabilistic machine learning technique used for classification tasks.

In this blog post, we will introduce the Naive Bayes algorithm, explain how it works, and provide some examples of its applications. We will also discuss the mathematical principles behind the algorithm and how they relate to the geometry of changing beliefs.

What is Naive Bayes Algorithm?

The Naive Bayes algorithm is a machine learning technique based on Bayes theorem, which is a fundamental concept in probability theory. Bayes theorem describes the probability of an event occurring based on prior knowledge of related conditions. This theorem is based on conditional probability and is widely used in machine learning to make predictions based on evidence.

Here,

A and B are certain events

P(A) and P(B) are independent probabilities of events A and B

P(A|B) = probability of event A given event B

P(B|A) = probability of event B given A

The Naive Bayes algorithm is a type of supervised learning primarily used for classification tasks, where it learns to make predictions based on labeled data. The algorithm calculates the probabilities of different features to classify data into different categories. The “naive” aspect of the algorithm comes from the assumption that all the features are independent of each other.

The above formula is easily comprehensible for solving high school problems, but let’s see how we can apply it to our dataset. Suppose we have a dataset with features , …….. and target y.

This can be expressed as:

The denominator terms are the same for all classes and do not depend on the class label. To simplify the calculations, we can ignore the denominator when comparing the probabilities of different classes to make a prediction.

To create a classifier model using the Naive Bayes algorithm, we calculate the probability of a given input for all possible values of the class variable y. The resulting value ranges between 0 and 1. We select the output with the highest probability, as it is the most likely class given the input features.

Example of Naive Bayes Algorithm in Action

To illustrate how the Naive Bayes algorithm works, let’s consider an example of text classification. Suppose we have a dataset of emails labeled as “spam” or “not spam”. We want to train a Naive Bayes classifier that can predict whether a new email is spam or not.

We can represent each email as a set of features, such as the presence or absence of certain keywords or phrases. For example, the presence of the word “lottery” might be a strong indicator that an email is spam.

We can use a training dataset of labeled emails to calculate the probabilities of each feature for each category. For example, we can calculate the probability of the word “lottery” appearing in a spam email and the probability of it appearing in a non-spam email.

When a new email arrives, we can use these probabilities to calculate the probability of the email belonging to each category. We can then assign the email to the category with the highest probability.

The Geometry Of Changing Beliefs

Bayes theorem is a fundamental concept in probability theory that allows us to update beliefs based on new evidence. At the heart of Bayes theorem is the notion that evidence should update beliefs, not determine them.

The theorem is based on two types of probabilities: prior probability and posterior probability. The prior probability is our initial belief or probability before we receive any new evidence. In contrast, the posterior probability is the updated belief or probability after we have taken the new evidence into account.

Here,

P(H) = prior probability

P(H|E) = posterior probability

P(E|H) = probability of evidence being true given the hypothesis holds (likelihood)

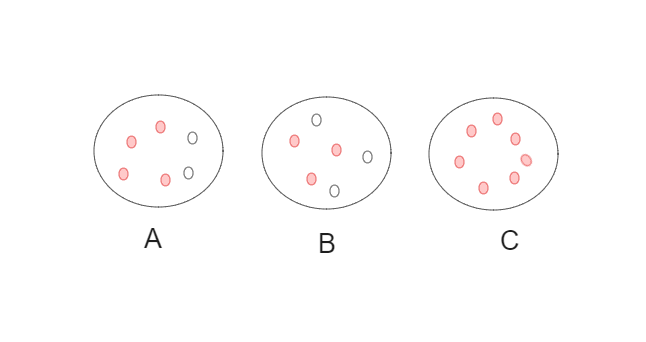

Bayesian updating is a process that can be represented geometrically using a probability space. The prior probabilities are represented as a point in this space, and the observed data is represented as a region corresponding to the new evidence. The updated probabilities are represented by a new point in the probability space.

In the context of Naive Bayes, we start with prior probabilities representing our beliefs about the likelihood of a data point belonging to each category. For example, if we’re classifying emails as spam or not spam, our prior probabilities might represent our initial beliefs about the likelihood of an email being spam or not. Then, when we observe new data (e.g., a new email), we use Bayes theorem to update our prior probabilities based on this new evidence. Specifically, we adjust our prior probabilities to take into account how likely the observed data is under each category. This adjustment causes the degree of belief (i.e., the probability) for each category to shift.

Applications of Naive Bayes Algorithm

The Naive Bayes algorithm has a wide range of applications in machine learning and beyond, owing to its high performance and quick learning ability, even with small datasets. Some of its most common applications include:

-

Text classification: The Naive Bayes algorithm is often used to classify text data, such as emails, news articles, or social media posts. The algorithm can be trained to recognize different categories of text, such as spam or non-spam emails, or positive or negative reviews.

-

Sentiment analysis: The Naive Bayes algorithm can also be used to perform sentiment analysis on text data. The algorithm can be trained to recognize the sentiment of a piece of text, such as whether it is positive, negative, or neutral.

-

Image classification: The Naive Bayes algorithm can also be used to classify images into different categories, such as different types of objects or scenes.

-

Medical diagnosis: The Naive Bayes algorithm can be used in medical diagnosis to predict the probability of a patient having a specific disease based on their symptoms and medical history.

Naive Bayes’ simplicity and speed make it a popular choice in many fields, including finance, engineering, and natural language processing. With its many applications and reliable performance, it’s no wonder that Naive Bayes remains a fundamental concept in machine learning and probability theory.

Conclusion

In conclusion, the Naive Bayes algorithm is a powerful and widely used probabilistic machine learning technique. It is based on Bayes’ theorem and the assumption of independence between features. The algorithm calculates the probability of each category based on the probabilities of the relevant features and assigns a data point to the category with the highest probability. This makes Naive Bayes a highly efficient and effective classifier for various applications, including text classification, sentiment analysis, image classification, and medical diagnosis. I hope this blog post has provided you with a clear understanding of the Naive Bayes algorithm and its practical applications in the field of machine learning.